If you only take away one thing from this article: when students wrote with ChatGPT, their brains showed weaker network coupling, and they remembered less of what they had just written. Writing without digital help produced stronger, broader engagement. The study is early-stage, but the signal is consistent.

What The Researchers Did

MIT’s team ran a four-month study with 54 student participants who wrote short SAT-style essays under one of three conditions: ChatGPT only, a conventional search engine, or “brain-only” with no tools. After three sessions in their assigned condition, a subset returned for a fourth crossover session in which they switched conditions, to test whether effects carried over from one way of writing to the other. Throughout each session the team recorded 32-channel EEG. Afterward they scored the essays, analyzed their language features, and interviewed the writers.

EEG signals were sampled at 500 Hz using a Neuroelectrics Enobio 32. The team fit multivariate autoregressive (MVAR) models in one-second windows and computed the dynamic Directed Transfer Function (dDTF) to estimate functional connectivity within each frequency band. The bands analyzed included delta, theta, low and high alpha, and beta.
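
To make that pipeline more concrete, here is a minimal Python sketch of two of its steps: cutting a 500 Hz recording into one-second windows and fitting an MVAR model to a single window. It is not the study's code. The non-overlapping windows, the ordinary least-squares estimator, the model order, and the function names `segment` and `fit_mvar` are assumptions chosen for illustration; dedicated EEG toolboxes use more careful estimators and model-order selection.

```python
import numpy as np

FS = 500        # sampling rate in Hz, as reported for the study's recordings
WINDOW_S = 1.0  # one-second analysis windows, as described in the study


def segment(eeg: np.ndarray, fs: int = FS, window_s: float = WINDOW_S) -> np.ndarray:
    """Split a (channels, samples) recording into non-overlapping windows.

    Returns shape (n_windows, channels, window_len). Trailing samples that do
    not fill a whole window are dropped. Non-overlapping windows are an
    assumption here; the study may have used a sliding window.
    """
    n_ch, n_samp = eeg.shape
    win = int(fs * window_s)
    n_win = n_samp // win
    trimmed = eeg[:, : n_win * win]
    return trimmed.reshape(n_ch, n_win, win).transpose(1, 0, 2)


def fit_mvar(x: np.ndarray, order: int) -> tuple[np.ndarray, np.ndarray]:
    """Least-squares fit of x[t] = sum_k A[k-1] @ x[t-k] + e[t].

    x has shape (channels, samples) and is assumed zero-mean (demean real EEG
    first). Returns coefficients A with shape (order, channels, channels) and
    the residual covariance with shape (channels, channels).
    """
    n_ch, n_samp = x.shape
    # Each row of the design matrix stacks the `order` previous samples.
    rows = [np.concatenate([x[:, t - k] for k in range(1, order + 1)])
            for t in range(order, n_samp)]
    Z = np.array(rows)   # (n_samp - order, channels * order)
    Y = x[:, order:].T   # (n_samp - order, channels)
    B, *_ = np.linalg.lstsq(Z, Y, rcond=None)
    A = B.T.reshape(n_ch, order, n_ch).transpose(1, 0, 2)
    resid = Y - Z @ B
    sigma = resid.T @ resid / resid.shape[0]
    return A, sigma


# Simulated 2-channel VAR(1) data: channel 0 drives channel 1, not the reverse.
rng = np.random.default_rng(0)
A_true = np.array([[0.5, 0.0],
                   [0.4, 0.3]])
x = np.zeros((2, 5 * FS))
for t in range(1, x.shape[1]):
    x[:, t] = A_true @ x[:, t - 1] + rng.standard_normal(2)

windows = segment(x)                          # five one-second windows
A_hat, sigma = fit_mvar(windows[0], order=1)  # model order 1 is an assumption
print(np.round(A_hat[0], 2))
```

Because the simulated data contain a one-way influence, the fitted `A_hat[0]` should land close to `A_true`, with a near-zero entry for the reverse direction. Directed-connectivity measures such as DTF are then computed from coefficients like these.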

The Core Neural Result

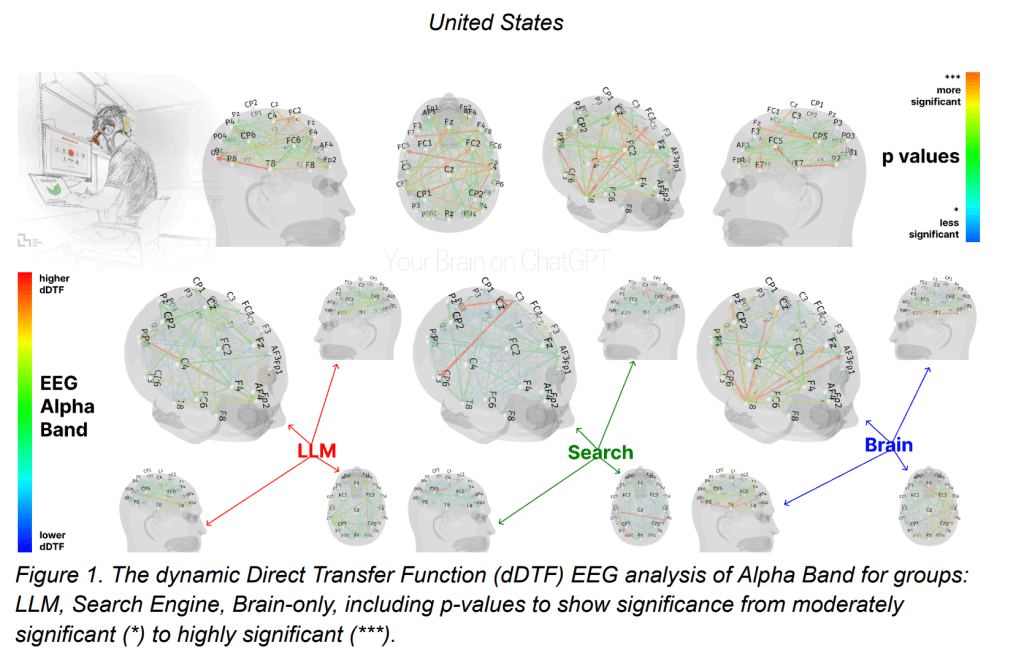

Across sessions, connectivity decreased as the amount of outside help increased. Brain-only writing showed the strongest, widest-ranging networks, search-engine writing was intermediate, and ChatGPT showed the weakest overall coupling. See the high-level alpha result in Figure 1.

ChatGPT vs brain-only. The largest differences appeared in alpha, a band linked to internal attention and semantic processing. One example the paper calls out is a left-parietal to right-temporal pathway that was much stronger during brain-only writing (p = 0.0002). Overall, brain-only writing showed 79 significant alpha connections versus 42 for the ChatGPT group.

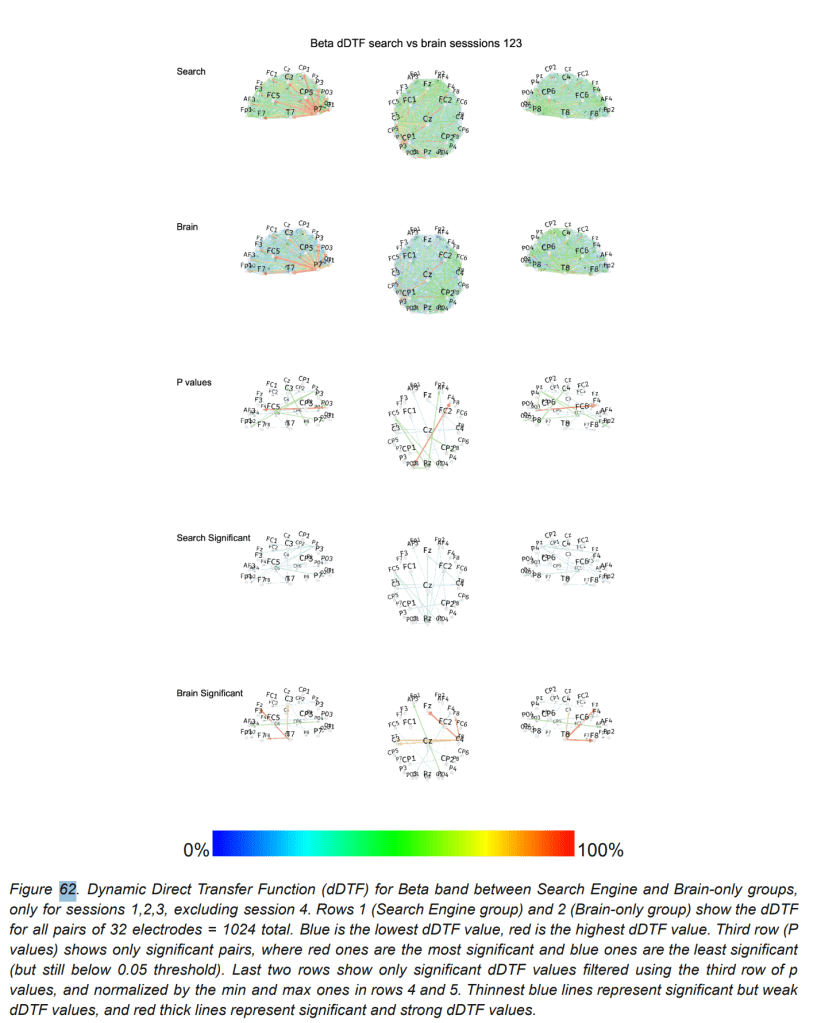

Search vs brain-only. Search engaged more parietal beta connectivity, consistent with visual scanning and motor coordination, while brain-only beta connectivity centered on temporal hubs, consistent with language retrieval and internal organization. Theta also favored brain-only writing, with far more fronto-parietal coupling flowing into right frontal cortex. See Figure 62 for beta and the summary that follows.

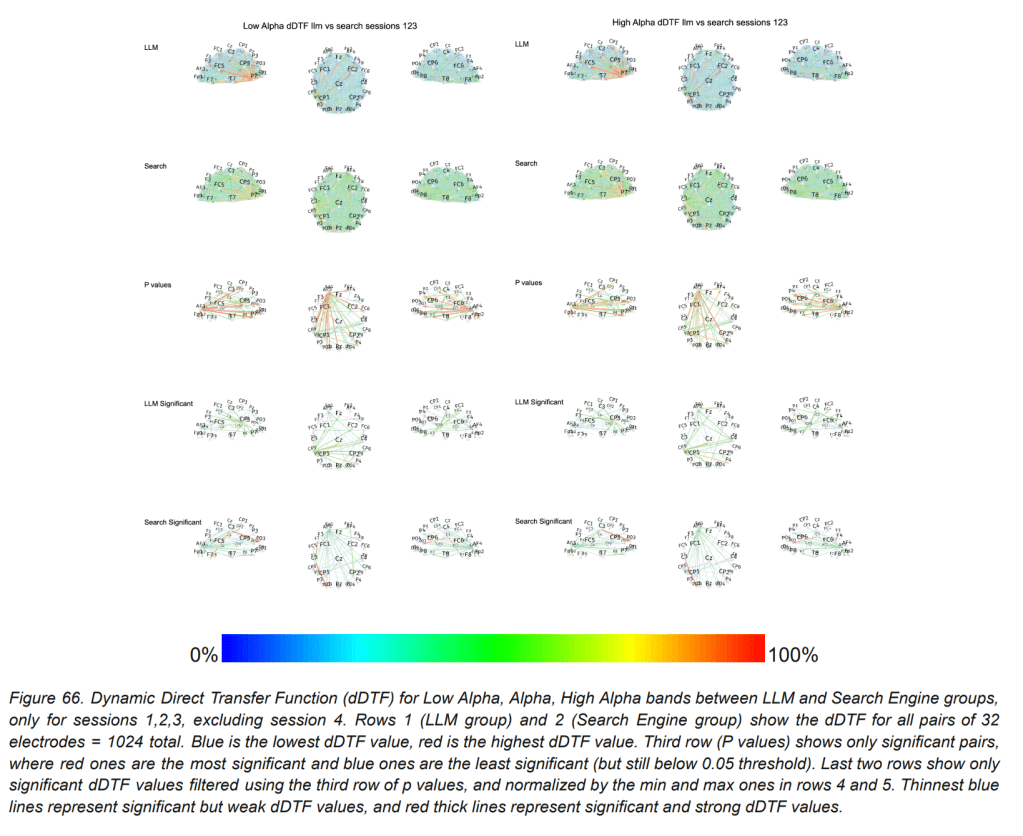

ChatGPT vs search. In alpha, overall connectivity strength was comparable, but the network shape differed: search showed more inflow from posterior regions into the prefrontal electrode AF3, while high-alpha connectivity was slightly higher for the ChatGPT group. See Figure 66.

The Crossover Session

Session 4 matters because it tests whether effects carry over when writers switch conditions. When former ChatGPT users wrote without AI, they showed under-engagement of alpha and beta networks and struggled to quote their own writing minutes later. When former brain-only writers used ChatGPT, connectivity rose sharply across frequency bands as they wrote.

Active Recall After The Session

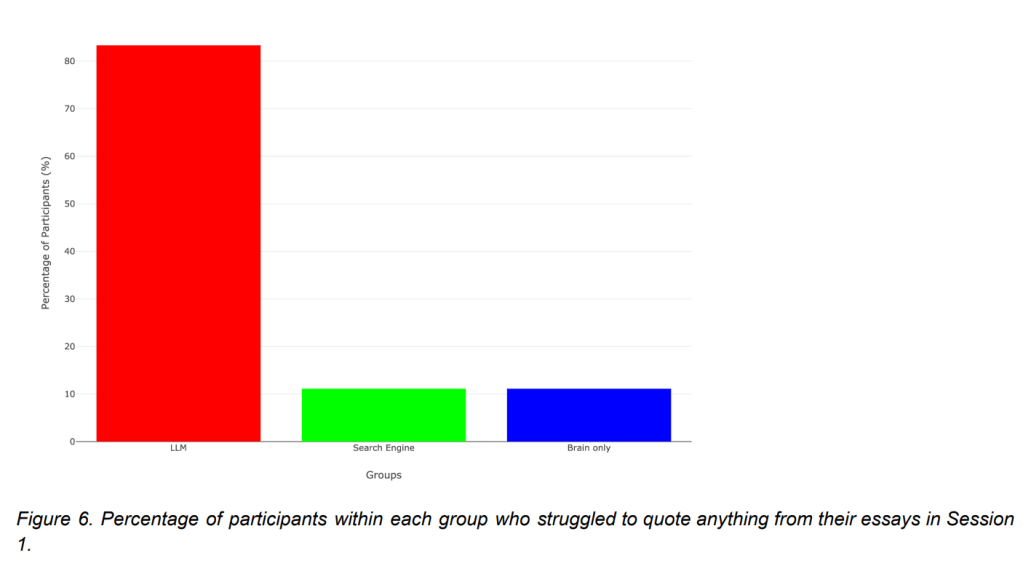

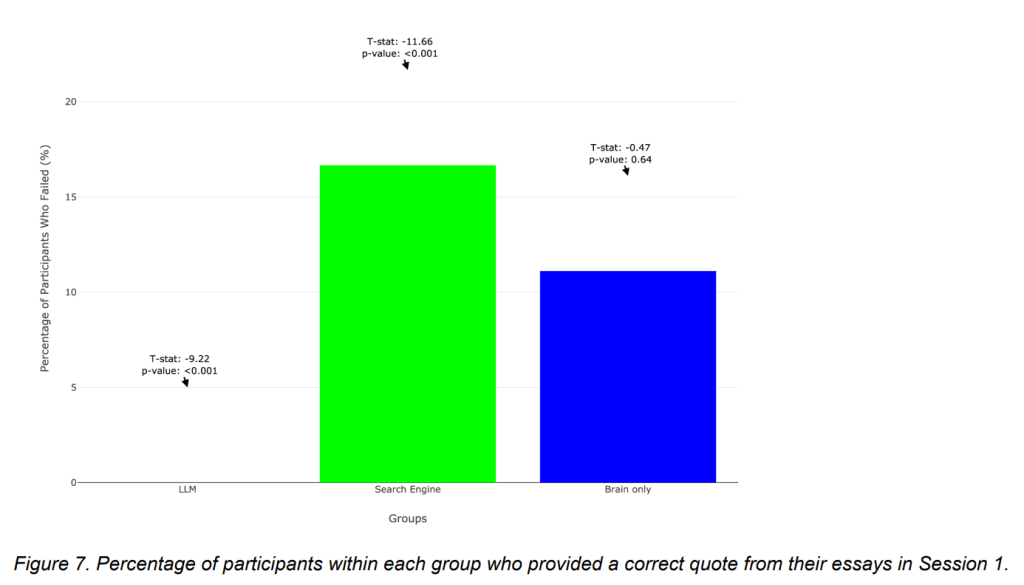

Right after writing, participants were asked to quote a sentence from their essay. In Session 1, 83% of ChatGPT users could not provide a quote, versus 11% in both the search and brain-only groups. The group effects were large and statistically significant. Not a single participant in the ChatGPT group produced a fully correct quote, while the other two groups performed strongly. See Figures 6 and 7.

In the crossover, the same pattern held. Seven of nine former ChatGPT users, now writing without AI, failed to quote their essay. Only one of nine former brain-only writers, now using ChatGPT, failed. Both differences were significant.
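
To see why a 7-of-9 versus 1-of-9 split can reach significance with such small groups, here is a minimal Fisher's exact test on those crossover counts, written with only the Python standard library. The choice of Fisher's exact test and the function name `fisher_exact_two_sided` are ours; the article does not say which test the authors used, so treat the result as a sanity check, not as the paper's reported statistic.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are groups, columns are outcomes. The margins are held fixed, and
    the p-value sums the probabilities of every table that is no more likely
    than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def prob(k: int) -> float:
        # Hypergeometric probability that the top-left cell equals k.
        return comb(col1, k) * comb(n - col1, row1 - k) / comb(n, row1)

    p_obs = prob(a)
    k_min, k_max = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so exact ties are not lost to float rounding.
    return sum(prob(k) for k in range(k_min, k_max + 1)
               if prob(k) <= p_obs * (1 + 1e-9))


# Crossover recall counts from the article:
# former ChatGPT users: 7 failed, 2 quoted; former brain-only writers: 1 failed, 8 quoted.
p = fisher_exact_two_sided(7, 2, 1, 8)
print(f"two-sided p = {p:.3f}")  # prints "two-sided p = 0.015"
```

With groups this small, the exact test avoids the large-sample approximations of a chi-square test, which is why it is a common choice for counts like these.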

Perceived ownership also shifted by condition. Brain-only writers overwhelmingly claimed full ownership of their essays. Search users reported strong but mixed ownership. ChatGPT users gave split responses, including several who felt no ownership at all.

Scoring: Human Teachers vs an AI Judge

Two blinded English teachers scored each essay on organization, grammar, vocabulary, content, length, uniqueness, and a “does this look LLM-assisted?” metric. The team also built an AI agent to act as a judge. Teachers tended to give lower average scores than the AI judge, whose scores clustered near four on a five-point scale. The teachers overwhelmingly gave higher scores to the less-assisted writers, whose essays sounded more human and natural.

What This Does NOT Show

The authors caution against claims of permanent harm. The data fit a pattern of cognitive offloading during LLM-assisted writing, not damage to the brain. They recommend structured, hybrid use that keeps the core cognitive work with the student.

Kenneth’s Opinion

As a college student I do not try to avoid AI. It already sits inside coursework, labs, and internships because it is useful. The risk is letting it do the part that builds you. I tell younger mentees at Mount Sinai to try the problem on their own first, even if the attempt is rough. Sketch an outline from class notes. Draft a paragraph or two without help. Then bring in the model to pressure-test the structure, surface gaps, and check the language. Anyone can type a prompt. What sets you apart is the judgment you bring before and after the prompt. Use AI as a tool, not a crutch, and keep your own learning front and center.

FAQ

How big was the study and who took part?

Fifty-four students from Boston-area universities completed the main sessions. Eighteen returned for the crossover session. Tasks used SAT-style prompts with a 20-minute writing window.

What exactly is dDTF in simple terms?

It is a frequency-domain method that estimates the direction and strength of information flow between EEG channels. It is built on multivariate autoregressive models and the idea of Granger causality, and it lets you ask which regions drive others during a task.
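
For readers who want to see the idea in code, here is a short sketch of the classic normalized Directed Transfer Function computed from MVAR coefficients: transform the coefficients to the frequency domain, invert to get the transfer matrix H(f), and normalize each row so the inflows into a channel sum to one. This is a hedged illustration, not the study's implementation. The study's dDTF involves additional steps this sketch does not reproduce, the function names are hypothetical, and the band edges in `BANDS` are conventional values assumed here rather than taken from the paper.

```python
import numpy as np


def dtf(A: np.ndarray, freqs: np.ndarray, fs: float) -> np.ndarray:
    """Normalized Directed Transfer Function from MVAR coefficients.

    A has shape (order, channels, channels), with x[t] = sum_k A[k-1] @ x[t-k] + e[t].
    Returns shape (len(freqs), channels, channels); entry [f, i, j] is the
    normalized flow from channel j into channel i at frequency f.
    """
    order, n_ch, _ = A.shape
    out = np.empty((len(freqs), n_ch, n_ch))
    for fi, f in enumerate(freqs):
        # A(f) = I - sum_k A_k * exp(-2*pi*i*f*k/fs)
        Af = np.eye(n_ch, dtype=complex)
        for k in range(1, order + 1):
            Af -= A[k - 1] * np.exp(-2j * np.pi * f * k / fs)
        H = np.linalg.inv(Af)  # transfer matrix H(f)
        power = np.abs(H) ** 2
        # Normalize each row so all inflows into channel i sum to 1.
        out[fi] = power / power.sum(axis=1, keepdims=True)
    return out


# Hypothetical band edges in Hz (conventional values, not from the paper).
BANDS = {"delta": (1, 4), "theta": (4, 8), "low_alpha": (8, 10),
         "high_alpha": (10, 12), "beta": (13, 30)}


def band_average(dtf_vals: np.ndarray, freqs: np.ndarray, band: str) -> np.ndarray:
    """Average DTF values over the frequencies that fall inside one band."""
    lo, hi = BANDS[band]
    mask = (freqs >= lo) & (freqs < hi)
    return dtf_vals[mask].mean(axis=0)


# Order-1 model in which channel 0 drives channel 1 but not the reverse.
A = np.array([[[0.5, 0.0],
               [0.4, 0.3]]])
freqs = np.arange(1, 31, dtype=float)
d = dtf(A, freqs, fs=500)
alpha = band_average(d, freqs, "low_alpha")
print("flow 0 -> 1:", alpha[1, 0], " flow 1 -> 0:", alpha[0, 1])
```

Because the example model has no path from channel 1 back to channel 0, the 1 to 0 flow comes out as zero at every frequency, which is exactly the kind of "who drives whom" asymmetry the method is designed to expose.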

Which frequency bands told the clearest story?

Alpha and theta connectivity favored brain-only writing, consistent with internal attention and memory demands. Beta split by condition, with search showing visual–motor integration and brain-only showing language hubs. Delta also favored brain-only writing during unaided composition.

Did ChatGPT improve writing scores?

Teachers and the AI judge sometimes rated LLM-assisted texts high on surface features, yet teachers often penalized a lack of originality or personal voice. The paper gives both sets of scores and a teacher quote explaining this.

Is the crossover just novelty?

The authors note that novelty and cognitive load could contribute to the connectivity surge when brain-only writers first used AI. However, former ChatGPT users still showed weaker engagement when the tool was removed, plus worse recall. Both effects matter for instruction.
